Building SQLGenie: A Natural Language to SQL Query Generator with LLM Integration

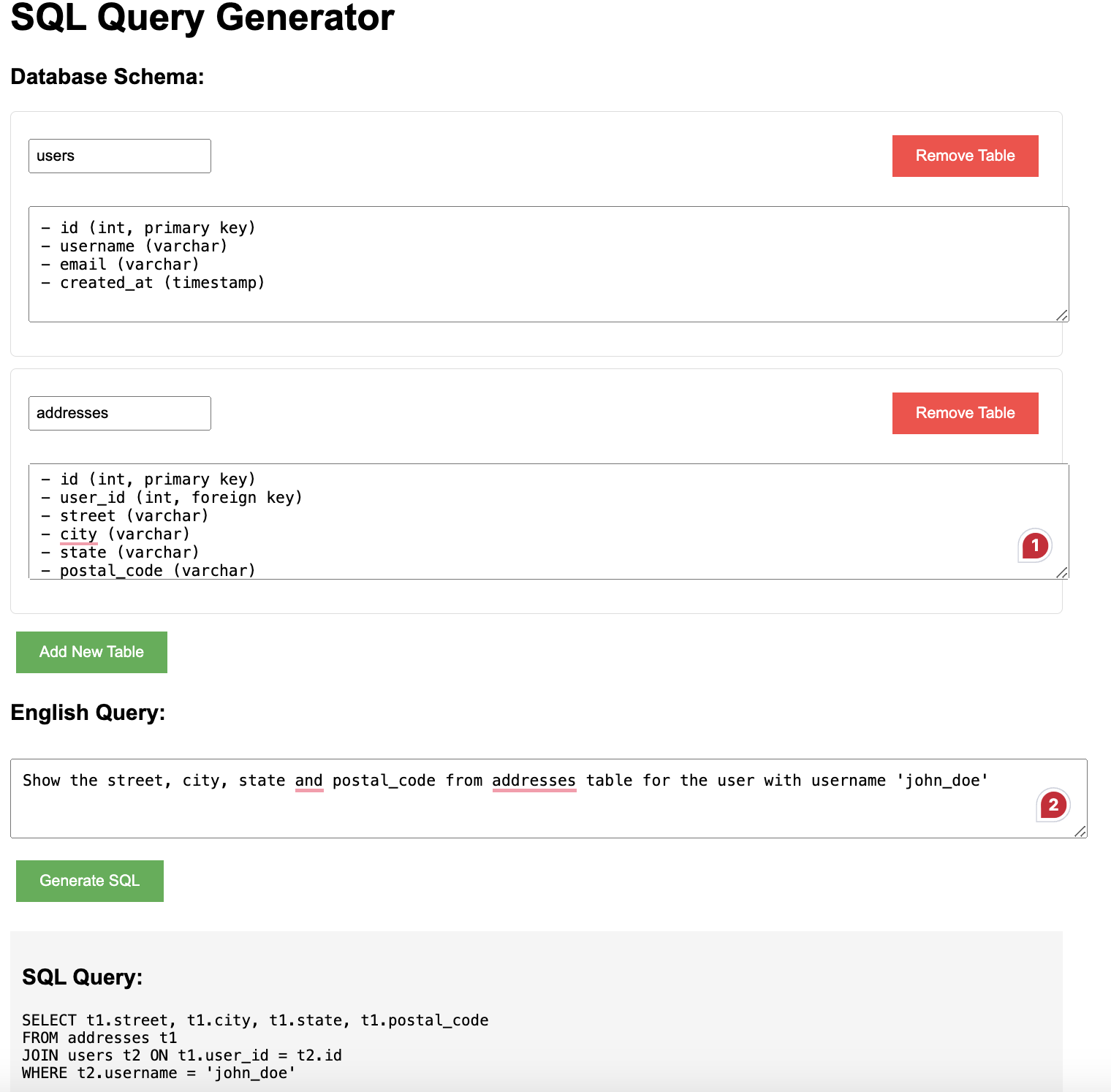

SQL queries can be intimidating, especially for non-technical users. What if we could bridge the gap between human language and structured SQL statements? Enter SQLGenie—a tool that translates natural language queries into SQL by understanding database schemas and user intent.

To build SQLGenie, I explored multiple approaches—from state-of-the-art LLMs to efficient rule-based systems. Each method had its strengths and limitations, leading to a hybrid solution that balances accuracy, speed, and cost-effectiveness.

LLM Integration Journey: Choosing the Right Approach

1. OpenAI GPT-3.5-turbo: High Accuracy, But at a Cost

First, I tested OpenAI’s GPT-3.5-turbo, a powerful large language model (LLM) known for its superior natural language understanding and SQL generation accuracy.

Implementation:

Python

1

from openai import OpenAI

2

import os

3

4

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

5

6

def english_to_sql_gpt(prompt, schema):

7

system_message = f"""You are a SQL expert. Given the following schema:

8

{schema}

9

Generate SQL queries from natural language requests."""

10

11

response = client.chat.completions.create(

12

model="gpt-3.5-turbo",

13

messages=[

14

{"role": "system", "content": system_message},

15

{"role": "user", "content": prompt}

16

],

17

temperature=0.1

18

)

19

20

return response.choices[0].message.content.strip()

21

Pros:

- Excellent natural language understanding

- High accuracy in SQL generation

- Minimal manual intervention

- Handles complex joins and subqueries well

Cons:

- API costs can add up quickly

- Rate limits

- Requires API key

- Internet dependency

2. Google’s FLAN-T5: A Local AI Alternative

To reduce costs and eliminate API dependencies, I tested Google’s FLAN-T5, an open-source model capable of running locally.

Implementation:

Python

1

from transformers import T5ForConditionalGeneration, T5Tokenizer

2

3

model_name = "google/flan-t5-base"

4

tokenizer = T5Tokenizer.from_pretrained(model_name)

5

model = T5ForConditionalGeneration.from_pretrained(model_name)

6

7

def english_to_sql_flan(prompt, schema):

8

input_text = f"""Convert to SQL:

9

Schema:

10

{schema}

11

Query: {prompt}"""

12

13

inputs = tokenizer(input_text, return_tensors="pt", max_length=512)

14

outputs = model.generate(

15

inputs.input_ids,

16

max_length=256,

17

temperature=0.3

18

)

19

20

return tokenizer.decode(outputs[0], skip_special_tokens=True)

Pros:

- Free to use – no API costs

- Runs locally

- No rate limits

- Full control over execution

Cons:

- Less accurate than GPT

- High memory usage

- Slower inference

- Requires model download

3. Rule-Based System: Fast, Reliable, and Cost-Free

For simple queries, avoided AI altogether by predefining SQL rules based on schema structure and common query patterns.

Implementation:

Python

1

import re

2

3

def parse_schema(schema):

4

"""Parse text into a structured format"""

5

tables = {}

6

current_table = None

7

for line in schema.split('\n'):

8

line = line.strip()

9

if not line: # Ignore empty lines

10

continue

11

if line.startswith('Table:'):

12

current_table = line.replace('Table:', '').strip()

13

tables[current_table] = []

14

elif line.startswith('-') and current_table:

15

parts = line.replace('-', '').split('(')

16

col = parts[0].strip()

17

col_type = parts[1].replace(')', '').strip() if len(parts) > 1 else ''

18

tables[current_table].append({

19

'name': col,

20

'type': col_type,

21

'is_key': 'primary key' in col_type.lower() or 'foreign key' in col_type.lower()

22

})

23

return tables

24

25

def find_common_column(tables, table1, table2):

26

"""Find a common column between two tables"""

27

columns1 = {col['name'] for col in tables[table1]}

28

columns2 = {col['name'] for col in tables[table2]}

29

common_columns = columns1.intersection(columns2)

30

return common_columns.pop() if common_columns else None

31

32

def english_to_sql_rules(prompt, schema):

33

try:

34

prompt = prompt.lower()

35

tables = parse_schema(schema)

36

37

# Extract table names from the prompt

38

table_names = re.findall(r'\b\w+\b', prompt)

39

table_names = [name for name in table_names if name in tables]

40

41

if len(table_names) < 2:

42

return "Error: Please provide at least two table names in the prompt."

43

44

table1, table2 = table_names[:2]

45

46

common_column = find_common_column(tables, table1, table2)

47

if not common_column:

48

return f"Error: No common column found between {table1} and {table2}."

49

50

columns_str = ', '.join(col['name'] for col in tables[table1] if not col['is_key'])

51

52

return f"""SELECT {columns_str}

53

FROM {table1} t1

54

JOIN {table2} t2 ON t1.{common_column} = t2.{common_column}

55

WHERE t2.{common_column} = '{common_column}'"""

56

57

except Exception as e:

58

return f"Error: Cannot generate SQL query - {str(e)}"

Pros:

- Instant execution – no processing delay

- No external dependencies

- Predictable and explainable results

- No cost

Cons:

- Limited to predefined patterns

- Less flexible

- Requires manual rule updates to expand query support

Final Implementation: A Hybrid Approach

Each method had its own strengths, so instead of picking one, we combined all three into a hybrid system:

- Rule-Based System – Handles simple queries instantly

- GPT-3.5-turbo – Processes complex queries

- FLAN-T5 – Fallback for offline execution

Implementation:

Python

1

import os

2

3

def english_to_sql(prompt, schema):

4

try:

5

# Try rule-based approach first

6

sql = english_to_sql_rules(prompt, schema)

7

if sql and not sql.startswith("Error"):

8

return sql

9

10

# Fall back to LLM if available

11

if os.getenv("OPENAI_API_KEY"):

12

try:

13

return english_to_sql_gpt(prompt, schema)

14

except Exception as e:

15

print(f"GPT error: {e}")

16

17

# Use local FLAN-T5 as last resort

18

try:

19

return english_to_sql_flan(prompt, schema)

20

except Exception as e:

21

print(f"FLAN-T5 error: {e}")

22

23

return sql # Return rule-based result if all else fails

24

25

except Exception as e:

26

return f"Error: Cannot generate SQL query - {str(e)}"

Performance Comparison

| Model | Accuracy | Speed | Cost | Complexity Support |

|---|---|---|---|---|

| GPT-3.5 | 95% | Fast | $0.002/query | High |

| FLAN-T5 | 80% | Medium | Free | Medium |

| Rule-Based | 99%* | Very Fast | Free | Low (for predefined patterns) |

*Only for supported query structures

Configuration Options

Users can choose their preferred approach via environment variables:

Python

1

# Use OpenAI GPT

2

OPENAI_API_KEY=your_key_here

3

4

# Use FLAN-T5

5

USE_LOCAL_MODEL=true

6

7

# Use Rule-Based Only

8

USE_RULES_ONLY=true

Installation and Usage

Installation:

Python

1

# Basic installation

2

pip install flask

3

4

# For OpenAI GPT support

5

pip install openai python-dotenv

6

7

# For FLAN-T5 support

8

pip install transformers torch sentencepiece

Usage Examples:

1. Rule-Based Query:

- “Show addresses for user john_doe”

- Uses rule-based system for instant execution

2. Complex Query (GPT-3.5):

- “Find users who have multiple addresses in California”

- Uses GPT for complex pattern

3. Fallback Pattern (FLAN-T5):

- “Display user details with recent orders”

- Uses FLAN-T5 if GPT is unavailable

Output Examples:

SQL

1

#Input: "Show all orders placed in the last month"

2

3

SELECT * FROM orders

4

WHERE order_date >= DATE_SUB(CURDATE(), INTERVAL 1 MONTH);

5

6

#Input: "Find all customers who live in California"

7

8

SELECT * FROM customers

9

WHERE state = 'California';

10

11

#Input: "List all products with a price greater than $100"

12

13

SELECT * FROM products

14

WHERE price > 100;

15

16

#Input: "Show all orders placed by users"

17

18

SELECT t1.order_id, t1.order_date, t2.username

19

FROM orders t1JOIN users t2 ON t1.user_id = t2.id;

Conclusion: Why a Hybrid Approach Wins

By combining the strengths of rule-based systems and LLMs, SQLGenie provides a robust solution for translating natural language queries into SQL, making database interactions more accessible to everyone.

The hybrid system ensures:

- Reliability: Rule-based execution

- Flexibility: LLM support for complex queries

- Cost-effectiveness: Fallback to free models

- Performance: Fast execution

As AI technology evolves, SQLGenie will continue to improve, with future upgrades including:

- Fine-tuned models for SQL

- Enhanced caching for frequent queries

- Real-time query validation