SHAP-Based Explainable AI (XAI) Integration With a .NET Application

Think of Explainable AI (XAI) as your friendly guide to a complex machine’s secret thoughts. Instead of leaving you guessing why an algorithm made a certain call, XAI opens the door, points out the important clues, and speaks plainly about what drove its decision. Explainable AI builds trust in an ML model’s decisions because it shows how each decision was made, which gives people grounds to rely on the model and a way to catch and fix its mistakes.

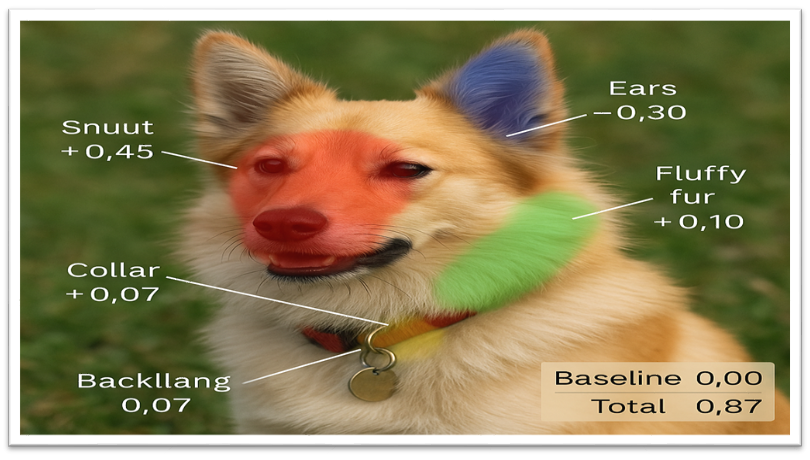

Explanation from Explainable AI:

“I started at 0%, with no prediction yet. Spotting the dog’s snout boosted my confidence by 45%, seeing its upright ears added 30%, the fluffy fur another 10%, and the collar a small 7%. A hint of grass pulled me down slightly, by 5%. This is a dog, and I’m 87% sure about it.”

XAI Methods Categorization

- Agnosticity: Whether the XAI method depends on the type of machine learning (ML) model. Model-specific methods, such as TreeExplainer, Grad-CAM, and Integrated Gradients, are tied to a particular model family. Model-agnostic methods, such as LIME, SHAP, Permutation Importance, and Counterfactuals, work with any model.

- Scope: Whether the XAI method explains a single prediction (local) or the model’s overall behavior (global). Local methods include LIME, SHAP, and Counterfactuals. Global methods include feature importance, surrogate trees, and partial dependence plots (PDP).

- Data Type: The kind of data the XAI method works with, for example text, images, or structured/tabular data.

- Explanation type: The form the explanation takes, for example a graph, text, or an image.
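To make the local versus global distinction concrete, the sketch below turns a handful of per-prediction (local) contributions into a global ranking by averaging their absolute values per feature. The feature names and numbers are made up for illustration; they are not output from a real model.

```python
from statistics import mean

# Hypothetical local explanations: one dict of feature contributions per prediction.
local_explanations = [
    {"snout": 0.45, "ears": 0.30, "grass": -0.05},
    {"snout": 0.40, "ears": -0.10, "grass": 0.02},
    {"snout": -0.35, "ears": 0.20, "grass": -0.02},
]

def global_importance(explanations):
    """Average the absolute contribution of each feature across all rows."""
    features = explanations[0].keys()
    scores = {f: mean(abs(row[f]) for row in explanations) for f in features}
    # Sort from most to least influential.
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))

ranking = global_importance(local_explanations)
print(list(ranking))  # ['snout', 'ears', 'grass']
```

Each row on its own is a local explanation; the averaged ranking is one simple way to get a global view from the same numbers.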

SHAP XAI Overview

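SHAP (SHapley Additive exPlanations) is a technique that assigns each feature a contribution to a given prediction. A feature’s contribution is its average marginal effect on the model output, taken over all possible combinations of the other features. Added to a baseline (the model’s average output), the contributions sum to the prediction.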

Example, based on the dog image:

“My baseline guess was 20%. Seeing the snout in your dog photo bumped me up by 25%, the ears added 30%, fluffy fur another 10%, and collar details gave a small 7%. A bit of busy background took away 5%, leaving me at 87% confidence that it’s a dog.”
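The averaging behind Shapley values can be shown on a toy case. The sketch below is a minimal brute-force calculation for a made-up two-clue confidence function; `confidence` and its numbers are assumptions for illustration, not the image model from the example. Real SHAP explainers use faster approximations or model-specific algorithms.

```python
from itertools import permutations

def shapley_values(value, players):
    """Exact Shapley values: average each player's marginal contribution
    over every possible ordering of the players."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        seen = set()
        for p in order:
            before = value(frozenset(seen))
            seen.add(p)
            totals[p] += value(frozenset(seen)) - before
    return {p: t / len(orderings) for p, t in totals.items()}

# Hypothetical "model": confidence (in %) given which clues are visible.
def confidence(clues):
    score = 20.0                      # baseline with no clues
    if "snout" in clues:
        score += 30.0
    if "ears" in clues:
        score += 20.0
    if "snout" in clues and "ears" in clues:
        score += 10.0                 # interaction: both clues together help extra
    return score

phi = shapley_values(confidence, ["snout", "ears"])
print(phi)                                           # {'snout': 35.0, 'ears': 25.0}
print(confidence(frozenset()) + sum(phi.values()))   # 80.0
```

The two contributions plus the 20% baseline add up to the score with both clues present (80%), which is the additive property the method is named for. The 10-point interaction bonus is split evenly between the two clues.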

SHAP Libraries (.NET framework and Platform Integrations)

- Microsoft InterpretML (Python): Part of the Azure ML ecosystem; offers a unified API for SHAP (via TreeShap), LIME, and other explainers.

- ML.NET OnnxTransformer (.NET): Use an ONNX-exported model and obtain SHAP values at inference time.

- SparkSHAP (Scala / PySpark): Scales SHAP value computation across Apache Spark clusters for exceptionally large datasets.

Integrate SHAP in a .NET Core Application as a REST API

Implementing SHAP Explanations in Python:

- Load data

- Train a model

- Initialize SHAP explainer for the model

- Compute SHAP values for given dataset

- Output the SHAP values for the sample

```python
import shap
import xgboost as xgb
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

# 1. Load data
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# 2. Train a model (XGBoost classifier in this case)
model = xgb.XGBClassifier(eval_metric='logloss')
model.fit(X_train, y_train)

# 3. Initialize SHAP explainer for the model
explainer = shap.TreeExplainer(model)  # efficient for tree models like XGBoost

# 4. Compute SHAP values for the provided dataset
sample = X_test[0:1]  # take one instance to explain
shap_values = explainer.shap_values(sample)  # array of contributions, one per feature

# 5. Output the SHAP values for the given sample
print("Model prediction:", model.predict(sample)[0])
print("Base value (expected output):", explainer.expected_value)
print("SHAP values for features:", shap_values[0])
```
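For a binary XGBoost classifier, TreeExplainer by default typically explains the raw margin (log-odds), so the base value plus the SHAP values gives a log-odds score rather than a probability. The sketch below shows the conversion with made-up numbers; `margin_to_probability` is a hypothetical helper, not part of the shap library.

```python
import math

def margin_to_probability(base_value, shap_values):
    """Sum the base value and contributions (log-odds), then apply the sigmoid."""
    margin = base_value + sum(shap_values)
    return 1.0 / (1.0 + math.exp(-margin))

# Hypothetical numbers, not output from the model above.
base_value = 0.5
contributions = [1.2, -0.4, 0.7]

probability = margin_to_probability(base_value, contributions)
print(round(probability, 3))  # 0.881
```

Comparing this number with the model's own `predict_proba` output for the same row is a quick sanity check that the explanation and the prediction agree.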

Exposing SHAP Explanations as a RESTful API

Once we have a Python model and the ability to compute SHAP values, the next step is to expose this functionality via a REST API so that a .NET application can consume it. We can create a lightweight web service in Python that listens for requests (containing input features) and returns the model’s prediction and SHAP explanation. You can use either Flask (a minimal WSGI framework) or FastAPI (a modern ASGI framework) to build the API – both are suitable.

```python
import joblib
import numpy as np
import shap
from flask import Flask, request, jsonify

app = Flask(__name__)

# Load the trained model and initialize the SHAP explainer once at startup
model = joblib.load("model.pkl")       # our pre-trained ML model
explainer = shap.TreeExplainer(model)  # explainer for the loaded model

@app.route('/explain', methods=['POST'])
def explain():
    # Parse input JSON into a feature array
    data = request.get_json()  # expecting a JSON object of feature values

    # Convert to a 2D array with one row. Values follow the key order of the
    # JSON object, which must match the feature order used in training.
    features = np.array([[data[f] for f in data]])

    # Get model prediction (use predict_proba if you need a probability)
    pred = model.predict(features)

    # Compute SHAP values for this input
    shap_vals = explainer.shap_values(features)

    # Prepare response
    result = {
        "prediction": int(pred[0]),
        "shap_values": {name: float(val)
                        for name, val in zip(data.keys(), shap_vals[0])},
        # expected_value may be a scalar or a one-element array
        "expected_value": float(np.ravel(explainer.expected_value)[0])
    }
    return jsonify(result)

if __name__ == "__main__":
    app.run(port=5001)  # port 5001 matches the URL used by the .NET client
```
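The endpoint above builds the feature row from whatever key order the JSON body happens to have, so a client that sends the same fields in a different order would be scored incorrectly. One way to guard against that, sketched below, is to reorder the payload against a fixed feature list before calling the model. `to_feature_row` and `FEATURE_NAMES` are hypothetical names introduced here for illustration.

```python
def to_feature_row(payload, feature_names):
    """Return feature values in training order, rejecting missing or unknown keys."""
    missing = [name for name in feature_names if name not in payload]
    unknown = [name for name in payload if name not in feature_names]
    if missing or unknown:
        raise ValueError(f"missing={missing}, unknown={unknown}")
    return [float(payload[name]) for name in feature_names]

# Hypothetical feature list; in practice it would be saved alongside the model.
FEATURE_NAMES = ["age", "income", "gender", "balance"]

row = to_feature_row(
    {"income": 50000, "age": 45, "balance": 10000, "gender": 0},
    FEATURE_NAMES,
)
print(row)  # [45.0, 50000.0, 0.0, 10000.0]
```

Inside the request handler, a `ValueError` from this helper could be turned into an HTTP 400 response so the caller learns which fields were wrong.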

Consuming the SHAP API from a .NET Backend

With the Python service running (e.g. at http://localhost:5001/explain), the .NET application can request explanations by calling this REST API. In an ASP.NET Core environment, you could create a service or controller method that uses HttpClient to POST the feature data and retrieve the explanation results. Below is an example in C# using HttpClient and the System.Net.Http.Json extensions (available in .NET 5+ for convenient JSON serialization):

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Net.Http.Json;
using System.Text.Json.Serialization;
using System.Threading.Tasks;

// Define a C# record to represent the response structure.
// The API returns snake_case keys, so each property is mapped explicitly.
public record ShapResponse(
    [property: JsonPropertyName("expected_value")] double ExpectedValue,
    [property: JsonPropertyName("shap_values")] Dictionary<string, double> ShapValues,
    [property: JsonPropertyName("prediction")] int Prediction);

...
// 1. Create an HttpClient (in a real app, reuse a single HttpClient instance)
HttpClient httpClient = new HttpClient { BaseAddress = new Uri("http://localhost:5001/") };

// 2. Prepare input feature data as an anonymous object or defined class
var inputData = new { age = 45, income = 50000, gender = 0, balance = 10000 };
// (Feature names and their order must match what the API expects.)

// 3. Send POST request with JSON body
HttpResponseMessage response = await httpClient.PostAsJsonAsync("explain", inputData);
// Ensure success status
response.EnsureSuccessStatusCode();

// 4. Read and parse the JSON response into our ShapResponse record
ShapResponse result = await response.Content.ReadFromJsonAsync<ShapResponse>();

// 5. Use the result in .NET (for example, log or process the explanation)
Console.WriteLine($"Model prediction: {result.Prediction}");
Console.WriteLine($"Base expected value: {result.ExpectedValue}");
foreach (var kv in result.ShapValues)
{
    Console.WriteLine($"Feature '{kv.Key}' contributes {kv.Value}");
}
```
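Printing every feature works for four inputs but becomes noisy for wider models, so a consumer will often show only the strongest drivers. The sketch below prototypes that post-processing in Python against a made-up response body with the same keys the `/explain` endpoint returns; the same logic ports directly to the C# client. `top_contributors` is a hypothetical helper and the numbers are illustrative.

```python
import json

# Hypothetical response body in the shape the /explain endpoint returns.
body = (
    '{"prediction": 1, "expected_value": 0.5, '
    '"shap_values": {"age": 0.8, "income": -1.3, "gender": 0.05, "balance": 0.4}}'
)

def top_contributors(response_json, k=3):
    """Return the k features with the largest absolute contribution."""
    shap_values = json.loads(response_json)["shap_values"]
    ranked = sorted(shap_values.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:k]

for name, value in top_contributors(body):
    direction = "raises" if value > 0 else "lowers"
    print(f"{name} {direction} the score by {abs(value)}")
```

Ranking by absolute value keeps strong negative drivers, such as `income` here, at the top instead of burying them below small positive ones.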

Conclusion

Understanding how AI models make decisions is no longer optional. Whether you’re developing models or using them in real-world applications, being able to explain their behavior helps you catch problems early, build trust with users, and stay accountable. Tools like SHAP give you a clearer view of what’s happening behind the scenes, making complex predictions easier to interpret. In the end, it’s not just about what the model predicts, but why it made that prediction.